#TALK

PyCon.DE 2018

PyCon.DE 2018 is over. Second time in a row we organized it in ZKM Karlsruhe. Next year PyCon.DE will move to Berlin. Read More

How to Organize a PyCon.DE

Some notes on how to organize a conference like PyCon.DE Read More

PyCon.DE 2017 and PyData Karlsruhe

The venue setup @zkmkarlsruhe for #PyCon.DE 2017 and PyData Karlsruhe is done. We are ready for liftoff tomorrow. Read More

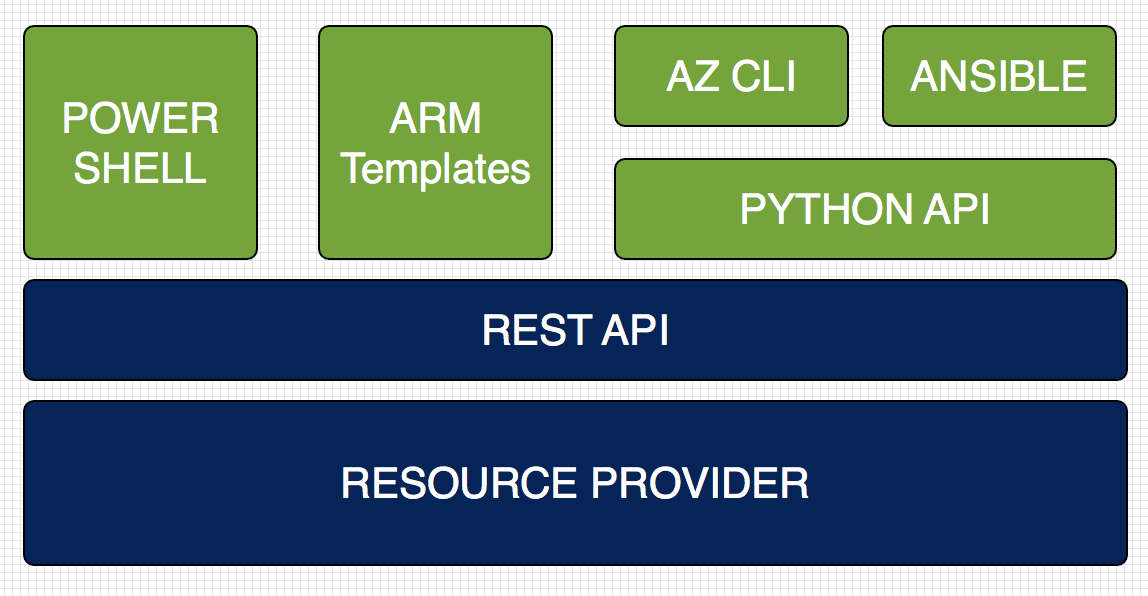

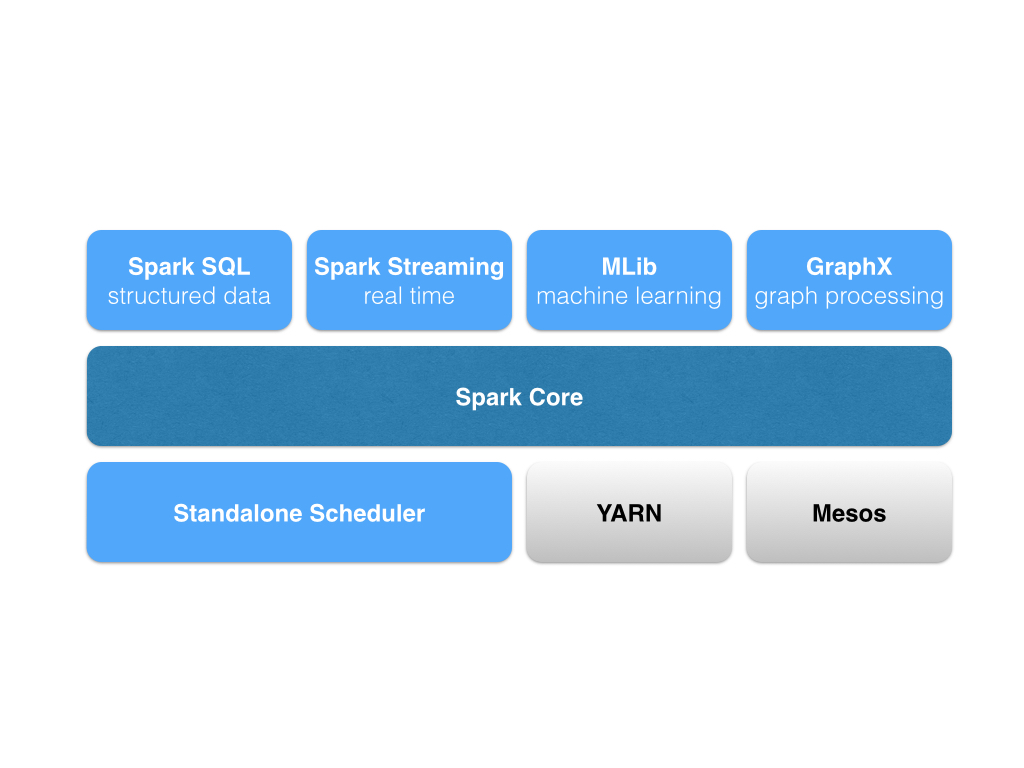

EuroPython 2017 - Infrastructure as Python Code - Run Your Services on Microsoft Azure

Using Infrastructure-as-Code principles with configuration through machine processable definition files in combination with the adoption of cloud computing provides faster feedback cycles in development/testing and less risk in deployment to production. Read More

PyConWeb 2017 Munich - Deploying Your Web Services on Microsoft Azure

This talk will give an overview on how to deploy web services on the Azure Cloud with different tools like Azure Resource Manager Templates, the Azure SDK for Python and the Azure module for Ansible and present best practices learned while moving a company into the Azure Cloud. Read More

PyCon.DE — 25–27 October 2017, Karlsruhe

The next PyCon.DE will be from 25-27th October 2017 at the ZKM - center for art and media in Karlsruhe/Germany. Read More

EuroPython 2014 - Log Everything with Logstash and Elasticsearch

When your application grows beyond one machine you need a central space to log, monitor and analyze what is going on. Logstash and elasticsearch store your logs in a structured way. Kibana is a web fronted to search and aggregate your logs. Read More